What is GPT-3? In this article, we'll try to answer this question along with other related topics such as:

- GPT-3 vs. ChatGPT

- History of GPT-3

- How GPT-3 is transforming the future of work: Use cases

- What are the benefits and limitations of GPT-3?

- Ethical side of GPT-3

But first, some context setting.

In 2011, IBM stunned the world when it debuted Watson, a room-sized supercomputer that could answer questions posed in natural language. It was seen as pathbreaking in the AI space until researchers in big tech and academia started building large neural networks capable of processing billions of data points.

One such neural network is GPT-3, a machine learning model that can generate many kinds of text. Unlike Watson, which was built for specific tasks (mostly answering questions), GPT-3 is general-purpose and can create large volumes of relevant and contextual machine-generated text.

What is GPT-3?

GPT-3, the third generation of the Generative Pre-trained Transformer, is a large language model developed by OpenAI. It is a generative model, which means that it can generate text that is similar to the content it was trained on, but not necessarily an exact copy.

Built on the success of previous AI models like GPT-2 and BERT, it is a neural network-based machine learning model that has been trained on a massive amount of text data to generate human-like text.
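To make "generative" concrete, here is a deliberately tiny sketch. It is not how GPT-3 works internally (GPT-3 is a transformer neural network); it is a toy word-pair model, with a made-up corpus and hypothetical function names, that only illustrates the idea of producing new text one word at a time from patterns seen in training data.

```python
import random
from collections import defaultdict

def train_bigram(text):
    """Record, for each word, the words that followed it in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, max_words=8, seed=0):
    """Sample one word at a time, each chosen from what followed the previous word."""
    rng = random.Random(seed)
    output = [start]
    # Stop at the length limit or when the last word has no known follower.
    while len(output) < max_words and followers.get(output[-1]):
        output.append(rng.choice(followers[output[-1]]))
    return " ".join(output)

# Made-up training text, purely for illustration.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram(corpus)
print(generate(model, "the"))
```

The output recombines word pairs from the corpus, so it resembles the training text without having to be a copy of it. GPT-3 applies the same next-word idea at a vastly larger scale and with a far richer model.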

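GPT-3 is one of the most powerful language models currently available, with 175 billion parameters. In AI models, parameters are the numeric values (weights) that the model learns during training. As a rough rule, the more parameters a model has, the more patterns it can capture, although size alone does not guarantee quality.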
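As a rough illustration of what a parameter count measures, the sketch below counts the learned values in a small fully connected network. The layer sizes are hypothetical and the architecture is far simpler than GPT-3's; the point is only that every connection weight and every bias counts as one parameter.

```python
def dense_layer_params(n_inputs, n_outputs):
    """One weight per input-output pair, plus one bias per output."""
    return n_inputs * n_outputs + n_outputs

def network_params(layer_sizes):
    """Total learned values in a stack of fully connected layers."""
    return sum(
        dense_layer_params(n_in, n_out)
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )

# Hypothetical toy network: 4 inputs -> 8 hidden units -> 2 outputs.
toy = network_params([4, 8, 2])
print(toy)  # (4*8 + 8) + (8*2 + 2) = 58
```

A model with 175 billion parameters has that many individually learned numbers.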

Here’s some perspective on the explosive growth of AI models:

- In 2017, AlphaGo Zero had a parameter size of 46 million.

- In 2018, Google’s BERT had 340 million parameters.

- In 2019, GPT-2 had 1.5 billion parameters, compared with 355 million for Facebook AI’s RoBERTa and 11 billion for Google’s T5-11B.
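For a sense of scale, the ratios between these counts and GPT-3's 175 billion can be worked out directly. The snippet below uses only figures quoted in this article; the dictionary and function names are illustrative.

```python
# Parameter counts as quoted in this article.
PARAMS = {
    "AlphaGo Zero (2017)": 46_000_000,
    "BERT (2018)": 340_000_000,
    "GPT-2 (2019)": 1_500_000_000,
    "T5-11B (2019)": 11_000_000_000,
    "GPT-3 (2020)": 175_000_000_000,
}

def times_larger(model, baseline):
    """How many times more parameters `model` has than `baseline`."""
    return PARAMS[model] / PARAMS[baseline]

for name in PARAMS:
    if name != "GPT-3 (2020)":
        ratio = times_larger("GPT-3 (2020)", name)
        print(f"GPT-3 has about {ratio:,.0f}x the parameters of {name}")
```

By these figures, GPT-3 is roughly 117 times the size of GPT-2 and more than 500 times the size of BERT.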

With 175 billion parameters, GPT-3 performs strongly across a wide range of natural language processing tasks, such as text generation, translation, and summarization, in some cases matching state-of-the-art systems. It can further be fine-tuned for specific use cases, such as generating chatbot responses, summarizing content, or creating content for social media.

GPT-3 vs. ChatGPT

When you discuss GPT-3, it’s hard not to talk about ChatGPT. While both are large language models trained by OpenAI, they differ in their capabilities and intended uses.

As explained in the earlier ‘what is GPT-3’ section, GPT-3 is a general-purpose language model that can be used for a wide range of tasks, such as text generation, translation, summarization, and more.

ChatGPT, on the other hand, is a specialized variant of GPT-3 that is designed to generate natural and engaging responses in a conversation. It was fine-tuned from a model in OpenAI's GPT-3.5 series, itself derived from GPT-3, using human-written conversations and reinforcement learning from human feedback (RLHF).

ChatGPT is intended to be used in applications such as chatbots and virtual assistants, where it can generate responses that are appropriate and relevant to the context of the conversation.

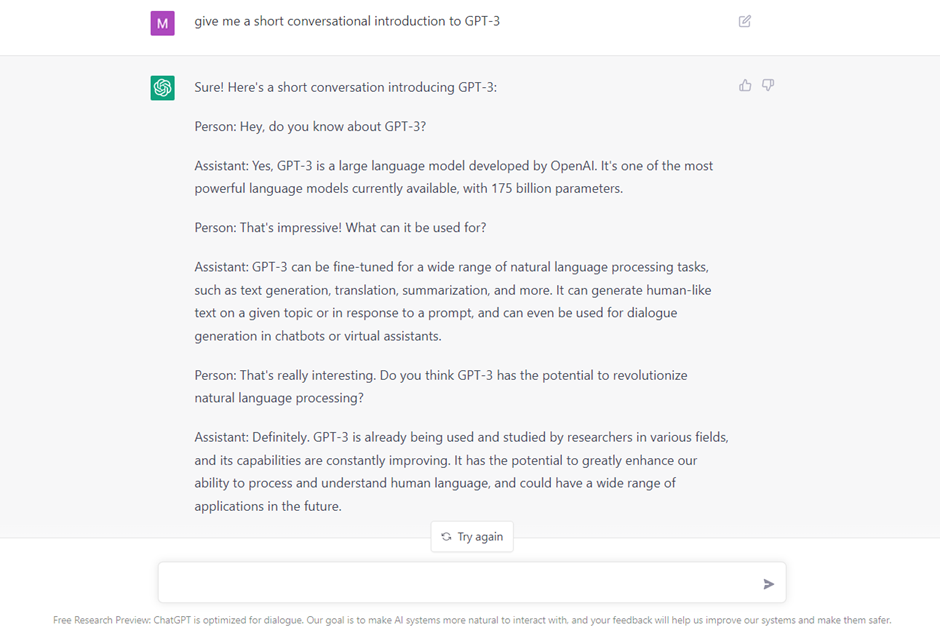

Here’s an example of ChatGPT in action.

In summary, GPT-3 is a general-purpose language model with a wide range of capabilities, while ChatGPT is a specialized variant of GPT-3 that is optimized for generating conversational responses.

History of GPT-3

GPT-3 was developed in 2019 as a more robust and versatile language model than its predecessors. It was trained on a massive amount of text data, including books, articles, and websites, to learn the patterns and structures of human language and perform a wide range of language-related tasks.

GPT-3 was officially announced in May 2020 and quickly attracted attention from the research community. At 175 billion parameters, it had roughly ten times more than any previous non-sparse language model, making it one of the most powerful and popular models available.

Since its release, GPT-3 has been widely used and studied by researchers. It has also sparked discussions and debates on the potential applications and implications of large language models in various fields.

How GPT-3 is transforming the future of work: Use cases

Now that we know what GPT-3 is and where it came from, here are some potential GPT-3 use cases:

Text generation: You can use GPT-3 to create human-like text on a given topic or in response to a given prompt. Use it to generate content for social media, such as posts, comments, and replies, or to generate creative writing, such as stories, poems, or scripts.

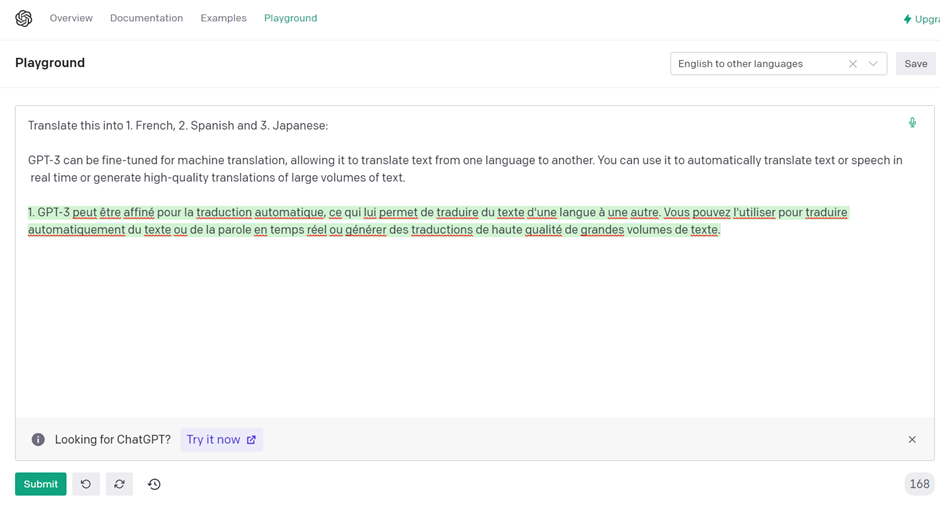

Translation: GPT-3 can translate text from one language to another. You can use it to translate text (or transcribed speech) in near real time, or to translate large volumes of text.

Sentence completion: Suffering from writer's block? GPT-3 helps you overcome it by generating the missing words or phrases in a sentence, so you can complete sentences as you write.

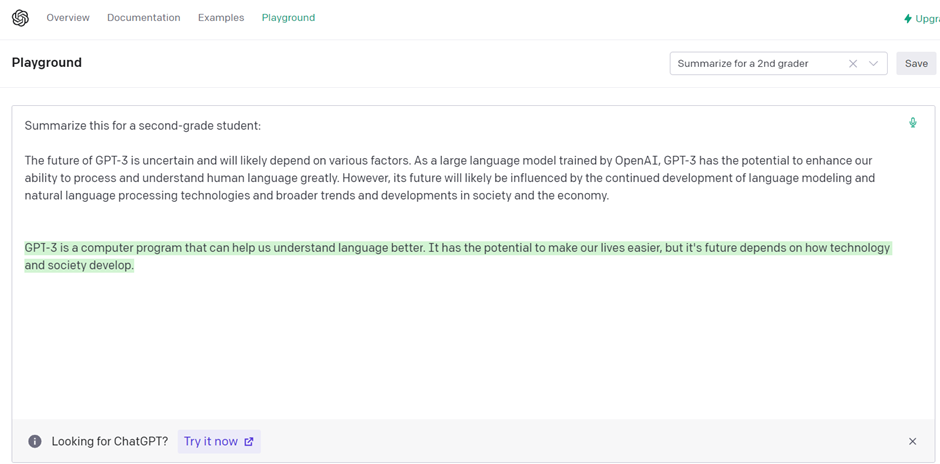

Summarization: You can use GPT-3 to summarize long texts, such as articles, reports, or books. The resulting concise summaries work as a quick reference and make large amounts of text easier to read and understand.

Named entity recognition: Use GPT-3 to identify and classify named entities in text, such as people, organizations, and locations. This lets you automatically extract information from large amounts of text, for example to populate a knowledge base or database.

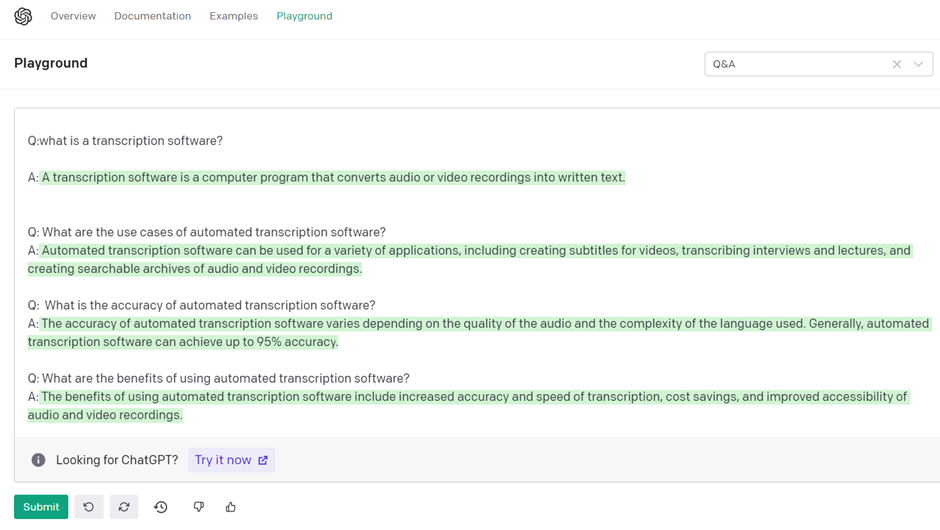

Question answering: GPT-3 can generate answers to natural language questions. Use it in applications such as virtual assistants or search engines to give users quick answers to their questions.

Dialogue generation: GPT-3 can generate appropriate and relevant responses in a conversation. Use it to power chatbots or virtual assistants that can engage in conversations with users.

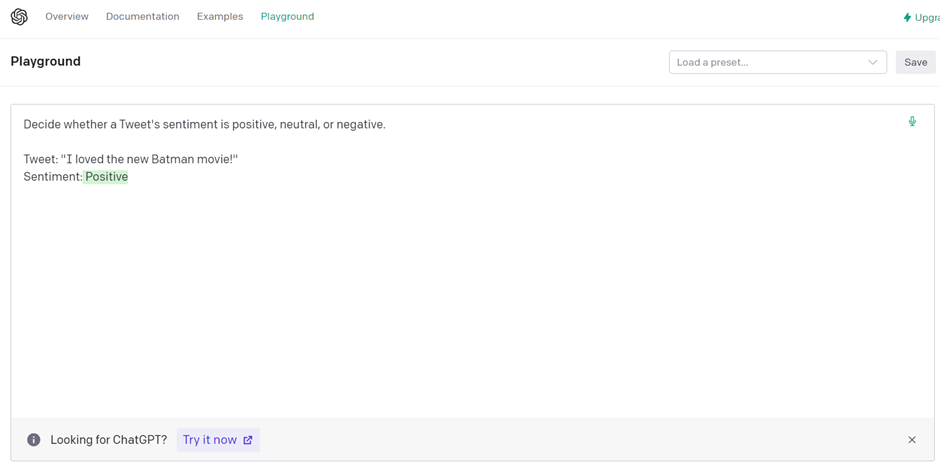

Sentiment analysis: GPT-3 can determine whether a piece of text is positive, negative, or neutral. Use it to automatically classify the sentiment of social media posts, customer reviews, or other forms of text.
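Most of the use cases above come down to the same pattern: describe the task in plain text, optionally show a few worked examples, and let the model continue the text. A minimal sketch of that prompt-building step follows. The function `build_prompt` and the `Text:`/`Answer:` layout are illustrative choices, not part of any OpenAI API, and the actual call to the model is left out because it depends on the client you use.

```python
def build_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: instruction, worked examples, then the new input."""
    lines = [instruction, ""]
    for text, answer in examples:
        lines += [f"Text: {text}", f"Answer: {answer}", ""]
    # End on an open "Answer:" so the model's continuation is the result.
    lines += [f"Text: {query}", "Answer:"]
    return "\n".join(lines)

# Sentiment analysis expressed as a prompt (example sentences are made up).
sentiment_prompt = build_prompt(
    "Classify the sentiment of each text as positive, negative, or neutral.",
    [("I love this phone.", "positive"),
     ("The delivery was late again.", "negative")],
    "The package arrived on Tuesday.",
)

# Translation uses the same helper with a different instruction.
translation_prompt = build_prompt(
    "Translate each text from English to French.",
    [("Good morning.", "Bonjour.")],
    "Thank you very much.",
)

print(sentiment_prompt)
```

Whatever text the model produces after the final `Answer:` is treated as the label, translation, or summary. Changing the instruction and examples is enough to switch tasks.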

What are the benefits of GPT-3?

Beyond knowing what GPT-3 is and how it can be used, it helps to understand its potential benefits. These are:

Improved natural language processing: GPT-3 is one of the most powerful language models currently available, with 175 billion parameters. You can use it for various tasks, including generating text, summarizing, and translating.

Enhanced creativity and productivity: GPT-3 can generate human-like text on a given topic or in response to a prompt. You can use it to create content for social media, websites, or other applications, potentially enhancing creativity and productivity in various fields.

Improved customer service: Power chatbots or virtual assistants that can engage in conversations with users. GPT-3 can improve the customer experience and make it easier for businesses to provide timely and accurate responses to customer inquiries.

Enhanced accessibility and inclusivity: GPT-3 could be used to improve accessibility and inclusivity by translating text (or transcribed speech) in near real time, or by summarizing long texts to make them easier to read and understand.

Increased knowledge and understanding: GPT-3 could extract information from large amounts of text, for example to populate a knowledge base or database. This could improve our ability to access and understand information across many fields.

Overall, GPT-3 has the potential to enhance our ability to process and understand human language, with benefits across a wide range of fields.

What are the limitations of GPT-3?

As with any technology, GPT-3 has some limitations and shortcomings.

One potential concern is the quality of the generated text, which can sometimes be incoherent or nonsensical. This is because language models like GPT-3 can only generate text based on the data they have been trained on, and the quality of the text can be affected by the quality and biases of the training data.

Additionally, GPT-3 can be expensive and resource-intensive to run, making it difficult for some organizations or individuals to use.
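A back-of-the-envelope calculation shows why. The sketch below estimates the storage needed for the model's weights alone, assuming 2 or 4 bytes per parameter. Those precisions are assumptions chosen for illustration, not a statement of how OpenAI stores or serves the model, and the function name is hypothetical.

```python
def weights_size_gb(n_params, bytes_per_param):
    """Storage needed for the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return n_params * bytes_per_param / 1e9

GPT3_PARAMS = 175_000_000_000  # figure quoted in this article

print(weights_size_gb(GPT3_PARAMS, 2))  # assuming 16-bit floats: 350.0
print(weights_size_gb(GPT3_PARAMS, 4))  # assuming 32-bit floats: 700.0
```

Even at the smaller figure, the weights would not fit in the memory of a single consumer graphics card, which is why models of this size are typically accessed as a hosted service.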

Finally, there are broader ethical concerns about the potential misuse of the technology, such as creating fake news or impersonating individuals online. While GPT-3 is a powerful and impressive technology, it is essential to consider its limitations and risks.

The ethical side of GPT-3

Like any digital transformation technology, GPT-3 carries some risks and potential drawbacks, including:

Bias: GPT-3 is trained on a considerable volume of text data, which may include biases and stereotypes. This could lead GPT-3 to generate biased or unfair text, which could be harmful or offensive.

Misuse: GPT-3 can generate human-like text that can be used for malicious purposes like creating fake news or impersonating someone else online. This could lead to confusion and harm the credibility of legitimate sources of information.

Dependence: GPT-3 has the potential to enhance our ability to process and understand human language significantly. However, it also carries the risk of creating a reliance on technology, where people may rely too heavily on GPT-3 and lose their ability to think and communicate effectively.

The future of GPT-3

The future of GPT-3 is uncertain and will likely depend on various factors. While it has the potential to enhance our ability to process and understand human language greatly, its future will likely be influenced by the continued development of language modeling and other technologies as well as broader trends and developments in society and the economy.

One potential future for GPT-3 is that it will continue to be used and studied by researchers in different fields, which could lead to the development of new applications for GPT-3 and other language models.

Another potential future for GPT-3 is that it will be used more widely in industry and commerce for content creation, customer service, and data analysis.

Overall, the future of GPT-3 is promising, and the technology is likely to keep improving as research on language models advances.